Big investor moves, hedging against inflation, retail buying driven by social sentiment, IPOs and crypto ETFs, and other data points allow funds to ride the bull market for substantial profit margins.

Here, we will discuss:

- The different data sets that big finance is using for real profits.

- The future of data in the cryptocurrency market.

- The recent surge in crypto.

The Recent Explosion of Crypto

In 2020 and Q1 2021, cryptocurrency values surged, with Bitcoin leading the way.

If you compare it with more conservative assets such as gold or indexes like the S&P 500, you will find that Bitcoin has nearly tripled in value this year alone!

Leading Factors

Let’s analyze the leading factors responsible for the increase:

Coronavirus Panic

As unemployment figures rolled in, uncertainty about the long-term effects of social distancing on businesses, combined with concerns over currency inflation, pushed investors toward assets that could serve as a store of value.

Institutional Interest

Cryptocurrencies, particularly Bitcoin, have started to attract major institutional interest. Among the most notable moves were Tesla's purchase of $1.5 billion in Bitcoin and MassMutual's investment of $100 million.

Payment Systems

Many major businesses, such as Etsy, PayPal, and Starbucks, are accepting payments in cryptocurrency. This broad retail acceptance drives circulation volume and has a positive influence on pricing.

Different Alt Data Sets That Big Finance Is Using for Real-Time Profits

Alternative data sets are especially useful if you are:

- A quant fund developer who needs to build crypto trading bots

- A hedge fund looking for real-time crypto movement data to help trigger trades

- An institutional researcher publishing policy papers on cryptocurrency regulation

Let's look at a few data points you will want to keep in mind and include in your models:

Social Sentiment

Influencers, or 'whales' in crypto parlance, are the people cryptocurrency investors look to for guidance on which coins to invest in and when to buy or sell. Start by compiling a list of cryptocurrency influencers and gathering the following for each:

- Currency Mentions

- Total Followers

- Shared News Links

- Actual Posts

- Post Volume

- List of Different Social Media Accounts

- Engagements

All of this will help you identify socially driven valuation movements. For example, suppose you have been collecting social mentions of Ethereum on a given whale's account and see about 20 coin mentions a day on average. On a given day you might pick up 30 mentions, a 50% increase in whale interest, and then see a rise in Ethereum's valuation. Once established, these 'social signals' can help trigger buy or sell orders ahead of major movements.
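The arithmetic in this example can be sketched in a few lines of Python. This is only an illustration: the function name `mention_spike`, the 50% threshold, and the sample counts are assumptions made for the sketch, not part of any real trading system.

```python
from statistics import mean

def mention_spike(history, today, threshold=0.5):
    """Compare today's mention count with the historical daily average.

    Returns (relative_change, is_signal). The default threshold of 0.5
    (a 50% increase) is an assumed value chosen to match the example.
    """
    baseline = mean(history)
    if baseline == 0:
        # No baseline activity, so a relative change is undefined.
        return 0.0, False
    change = (today - baseline) / baseline
    return change, change >= threshold

# Hypothetical daily Ethereum mention counts on one whale's account
# (they average 20 per day).
history = [18, 22, 20, 19, 21, 20, 20]

change, signal = mention_spike(history, today=30)
print(f"change={change:.0%}, signal={signal}")
```

In practice the baseline window and the threshold would need tuning per account, since some influencers post far more erratically than others.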

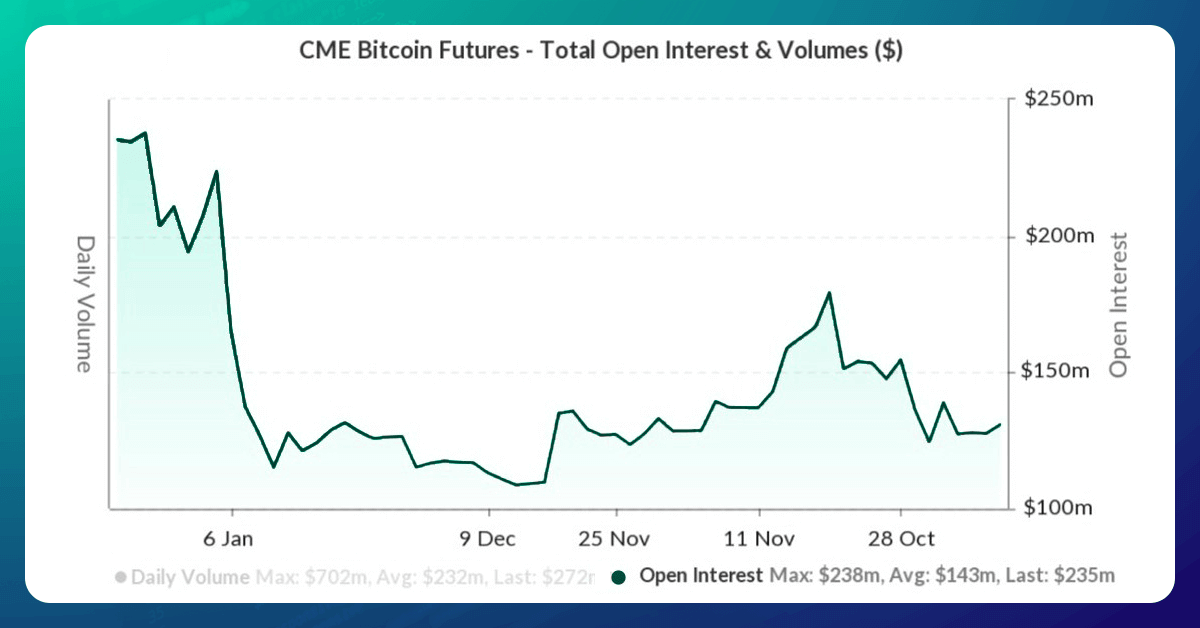

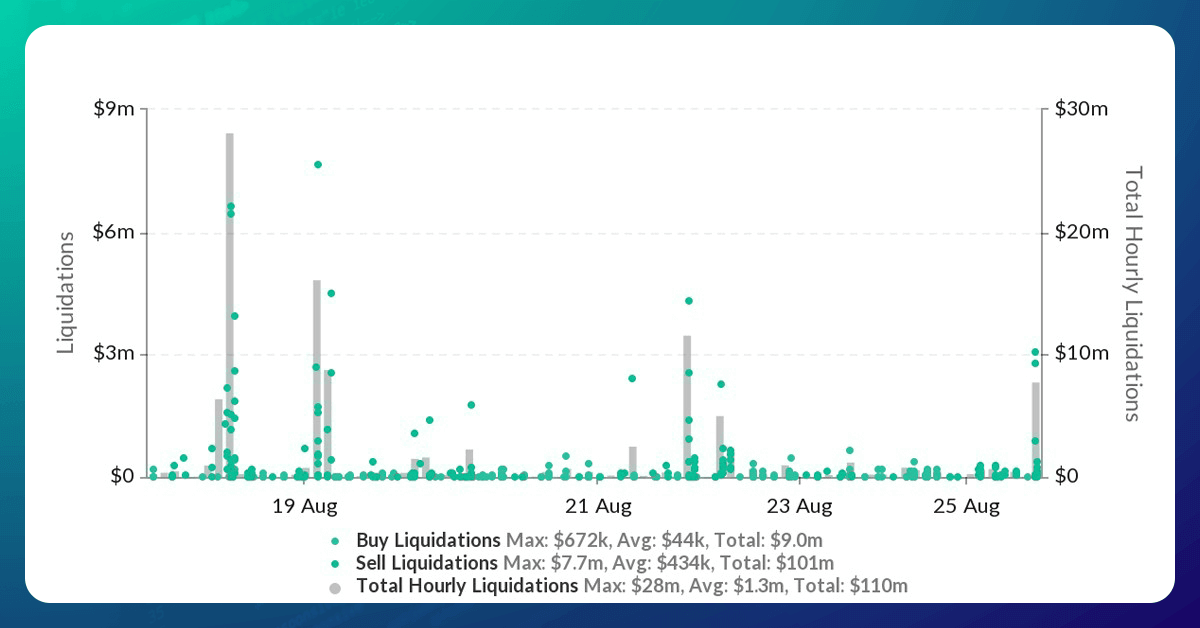

Coin Monitoring

Beyond social sentiment, you also need to gather data on each coin's current and historical price fluctuations and metrics. This helps you and your team build charts that make it easy to view quarterly highs and lows, as well as unexpected volatile swings.
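As a rough sketch of the kind of summary that could feed such charts, the snippet below groups closing prices by quarter and flags large moves between consecutive observations. The prices are made up, and the function names and the 10% swing threshold are assumptions for illustration only.

```python
from collections import defaultdict
from datetime import date

def quarterly_high_low(prices):
    """Map (year, quarter) -> (high, low) from a list of (date, close) pairs."""
    buckets = defaultdict(list)
    for day, close in prices:
        quarter = (day.month - 1) // 3 + 1
        buckets[(day.year, quarter)].append(close)
    return {key: (max(vals), min(vals)) for key, vals in buckets.items()}

def volatile_days(prices, threshold=0.10):
    """Return dates whose close moved more than `threshold` (as a fraction)
    relative to the previous observation in the series."""
    flagged = []
    for (_, prev_close), (day, close) in zip(prices, prices[1:]):
        if prev_close and abs(close - prev_close) / prev_close > threshold:
            flagged.append(day)
    return flagged

# Made-up closing prices for a hypothetical coin, sorted by date.
prices = [
    (date(2021, 1, 4), 100.0),
    (date(2021, 2, 1), 120.0),
    (date(2021, 3, 1), 115.0),
    (date(2021, 4, 1), 150.0),
    (date(2021, 4, 2), 130.0),
]

summary = quarterly_high_low(prices)
swings = volatile_days(prices)
print(summary)
print(swings)
```

With real data you would feed in a full daily series per coin; the same two summaries can then be plotted with whatever charting tool your team already uses.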

You might also collect news data points, which would have surfaced multiple stories about Tesla's $1.5 billion investment in this asset class. Analysts could then build a trigger into their trading models based on cryptocurrency acquisitions by institutional investors or large corporations.
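One simple way to turn such news items into a model trigger is a keyword-and-amount filter over headlines. The sketch below is a minimal illustration: the keyword lists, the $50 million cutoff, and the headline wording are all assumptions, and a production system would need far more robust text processing.

```python
import re

# Assumed keyword lists; extend them for real-world coverage.
ACQUISITION_WORDS = ("buys", "bought", "purchases", "invests", "acquires")
ASSET_WORDS = ("bitcoin", "ethereum", "crypto")

# Matches amounts such as "$1.5 billion" or "$100 million".
AMOUNT = re.compile(r"\$(\d+(?:\.\d+)?)\s*(billion|million)", re.IGNORECASE)
SCALE = {"million": 1e6, "billion": 1e9}

def acquisition_signal(headline, min_usd=50e6):
    """Return the USD amount if the headline looks like a large crypto
    acquisition, otherwise None. `min_usd` is an assumed cutoff."""
    text = headline.lower()
    if not any(word in text for word in ACQUISITION_WORDS):
        return None
    if not any(asset in text for asset in ASSET_WORDS):
        return None
    match = AMOUNT.search(headline)
    if match is None:
        return None
    usd = float(match.group(1)) * SCALE[match.group(2).lower()]
    return usd if usd >= min_usd else None

# Illustrative headline wording, not quotes from real articles.
headlines = [
    "Tesla buys $1.5 billion in Bitcoin",
    "MassMutual invests $100 million in Bitcoin",
    "Coffee chain launches new loyalty app",
]

signals = [acquisition_signal(h) for h in headlines]
print(signals)
```

A non-`None` result could then be passed to the trading model as one input among many, rather than acted on directly.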

Data in Cryptocurrency Market: The Future

As cryptocurrencies become increasingly popular among investors and consumers, there are numerous areas where data collection could play an important part.

One example is regulation. Central banks and regulators, predominantly in the US, may try to introduce monetary regulations or legislation, and the U.S. Treasury Secretary is already pushing for cryptocurrency regulation. Live data feeds of governmental updates could therefore become a key requirement for participants in the digital currency market.

Other examples include companies that offer cryptocurrency traders:

- Taxation

- Reporting

- Online Analysis

- Investment Services

These companies will need live cryptocurrency data feeds to power their tools, dashboards, and SaaS applications, and will be looking for data collection networks that can deliver the goods.

How Can Web Screen Scraping Help?

At Web Screen Scraping, we help you extract cryptocurrency data from cryptocurrency markets according to your requirements. We extract all cryptocurrency-related data at reasonable prices. For more information, contact Web Screen Scraping or ask for a quote!
