
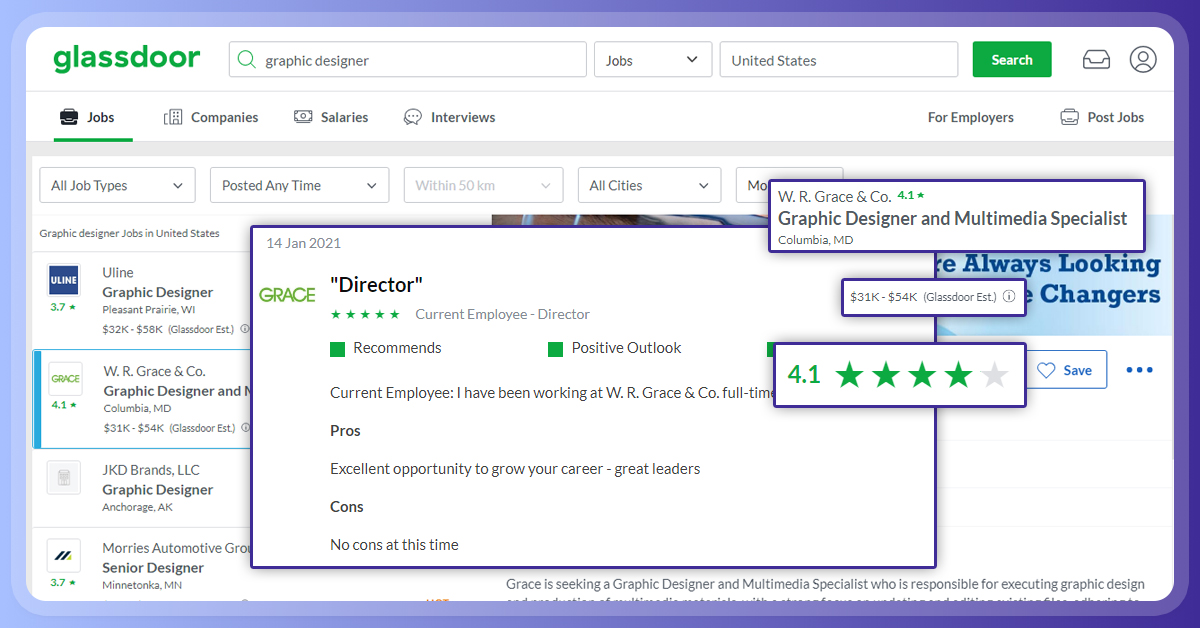

This blog post covers scraping job listings based on location and specific job names. You can extract job ratings and estimated salaries, or go a step further and extract jobs based on the number of miles from a specific city. By scraping Glassdoor jobs, you can track job listings over a period of time and identify postings that are newly listed or removed to see which jobs are trending.

In this post, we will scrape Glassdoor.com, one of the fastest-growing job recruiting sites. The scraper will extract the fields listed below for a specific job title in a given location.

Below is the list of data fields that we scrape from Glassdoor:

- Job Name

- Company Name

- State (Province)

- City

- Salary

- Job URL

- Expected Salary

- Company Rating

- Company Revenue

- Company Website

- Year Founded

- Industry

- Company Locations

- Date Posted

Scraping Logic

First, construct the URL for the search results on Glassdoor. Since we are scraping listings by job name and location, the URL has to encode both, for example a search for Android developer jobs in Boston, Massachusetts.
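As a rough illustration of this step, the sketch below encodes a job name and a location into a query string. The base URL and the parameter names `keyword` and `location` are hypothetical placeholders, since the actual Glassdoor search URL is not shown in this post.

```python
from urllib.parse import urlencode

# Hypothetical placeholder: the real Glassdoor search URL is not given in this post.
BASE_URL = "https://example.com/jobs/search"


def build_search_url(keyword, place, base_url=BASE_URL):
    """Return a search URL carrying the job name and the location."""
    # "keyword" and "location" are assumed parameter names, for illustration only.
    query = urlencode({"keyword": keyword, "location": place})
    return f"{base_url}?{query}"


print(build_search_url("Android developer", "Boston"))
```

`urlencode` takes care of escaping spaces and special characters, which matters for multi-word job names such as "Android developer".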

Download the HTML of the search results page using Python Requests.
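A minimal sketch of the download step might look like the following. The function name `fetch_html`, the User-Agent string, and the timeout value are assumptions made for illustration and are not taken from the original script.

```python
import requests

# Illustrative header only; the original script's headers are not shown in this post.
DEFAULT_HEADERS = {"User-Agent": "Mozilla/5.0 (compatible; example-scraper/0.1)"}


def fetch_html(url, session=None, timeout=30):
    """Download a page and return its HTML as text."""
    # Reuse a caller-supplied session if there is one, otherwise create a new one.
    session = session or requests.Session()
    response = session.get(url, headers=DEFAULT_HEADERS, timeout=timeout)
    # Raise an exception on 4xx/5xx responses instead of parsing an error page.
    response.raise_for_status()
    return response.text
```

Passing the session in as a parameter keeps the function easy to exercise without touching the network.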

Parse the page using LXML. LXML lets you navigate the HTML tree structure using XPaths. We have predefined the XPaths for the details we need in the code.
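The parsing step can be sketched as below. The sample HTML, its class names, and the XPaths are invented for illustration; the real Glassdoor markup is different and changes over time, so the actual XPaths have to be worked out from the live page.

```python
from lxml import html

# Made-up markup standing in for a results page; not Glassdoor's real structure.
SAMPLE_HTML = """
<ul>
  <li class="job">
    <a class="title" href="/job/1">Android Developer</a>
    <span class="company">Example Corp</span>
    <span class="location">Boston, MA</span>
    <span class="salary">$90k-$120k</span>
  </li>
  <li class="job">
    <a class="title" href="/job/2">Senior Android Developer</a>
    <span class="company">Sample Inc</span>
    <span class="location">Cambridge, MA</span>
  </li>
</ul>
"""


def first_text(node, xpath):
    """Return the first XPath match as stripped text, or '' if nothing matches."""
    values = node.xpath(xpath)
    return values[0].strip() if values else ""


def parse_listings(page_source):
    """Extract one dict per job listing from the page HTML."""
    tree = html.fromstring(page_source)
    jobs = []
    for item in tree.xpath('//li[@class="job"]'):
        location = first_text(item, './/span[@class="location"]/text()')
        # Split "City, ST" into its two parts; state is '' if there is no comma.
        city, _, state = location.partition(", ")
        jobs.append({
            "Name": first_text(item, './/a[@class="title"]/text()'),
            "Company": first_text(item, './/span[@class="company"]/text()'),
            "State": state,
            "City": city,
            "Salary": first_text(item, './/span[@class="salary"]/text()'),
            "Url": first_text(item, './/a[@class="title"]/@href'),
        })
    return jobs


for job in parse_listings(SAMPLE_HTML):
    print(job)
```

Returning an empty string for missing fields, as `first_text` does, keeps one listing without a salary from breaking the whole run.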

Save the information to a CSV file. In this post, we are only extracting the company name, job name, job location, and expected salary from the first page of results, so a CSV file is sufficient for all the required details. If you want to scrape data in larger amounts, a JSON file would be more convenient.
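Writing the results out could be done with the standard library `csv` module, roughly as follows. The column names and the `write_jobs_csv` helper are assumptions for this sketch, not the original script's names.

```python
import csv

# Assumed column names for illustration.
FIELDNAMES = ["Name", "Company", "State", "City", "Salary", "Url"]


def write_jobs_csv(jobs, path):
    """Write a list of job dicts to a CSV file with a header row."""
    with open(path, "w", newline="", encoding="utf-8") as csv_file:
        writer = csv.DictWriter(
            csv_file,
            fieldnames=FIELDNAMES,
            quoting=csv.QUOTE_ALL,   # quote every field so commas in values are safe
            extrasaction="ignore",   # skip keys that are not in FIELDNAMES
        )
        writer.writeheader()
        writer.writerows(jobs)
```

Fields missing from a job dict are written as empty strings, which is the default behaviour of `csv.DictWriter`.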

Requirements

Install Python 3 and Pip

Linux users can follow this guide to install Python 3:

http://docs.python-guide.org/en/latest/starting/install3/linux/

Mac users can follow this guide:

http://docs.python-guide.org/en/latest/starting/install3/osx/

Windows users can follow this guide:

https://phoenixnap.com/kb/how-to-install-python-3-windows

Packages

For this web scraping tutorial using Python 3, we need some packages for downloading and parsing the HTML. Here are the package requirements:

PIP, to install the required packages in Python (https://pip.pypa.io/en/stable/installing/)

Python Requests, to make requests and download the HTML content of the pages (http://docs.python-requests.org/en/master/user/install/)

Python LXML, for parsing the HTML tree structure using XPaths (http://lxml.de/installation.html)
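Before running the scraper, it can help to confirm that the packages listed above are importable. The check below is a small sketch; the helper name `missing_packages` is made up for this example.

```python
import importlib.util

# The two third-party packages this tutorial relies on.
REQUIRED = ["requests", "lxml"]


def missing_packages(names=REQUIRED):
    """Return the package names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


missing = missing_packages()
if missing:
    print("Missing packages: " + ", ".join(missing))
    print("Install them with: python3 -m pip install " + " ".join(missing))
else:
    print("All required packages are installed.")
```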

The Code

For more information, use the link below:

https://www.webscreenscraping.com/contact-us.php

Running the Scraper

The script is named glassdoor.py. Running it from a command prompt or terminal with the -h flag prints its usage:

    usage: glassdoor.py [-h] keyword place

    positional arguments:
      keyword     job name
      place       job location

    optional arguments:
      -h, --help  show this help message and exit

The keyword argument is any keyword related to the job you are searching for, and the place argument is used to find that job in a specific location. The example below shows how to run the script to find Android developer listings in Boston:

python3 glassdoor.py "Android developer" "Boston"

This creates a CSV file called Android developer-Boston-job-results.csv in the same folder as the script. It holds the data scraped from Glassdoor by the command above.
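The command-line interface and output filename described above could be wired up with `argparse` roughly like this. It is a sketch that matches the usage message, not the original source; the function names `build_parser` and `output_filename` are assumptions.

```python
import argparse


def build_parser():
    """Build a parser with the two positional arguments from the usage message."""
    parser = argparse.ArgumentParser(prog="glassdoor.py")
    parser.add_argument("keyword", help="job name")
    parser.add_argument("place", help="job location")
    return parser


def output_filename(keyword, place):
    """Name the results file after the search terms."""
    return f"{keyword}-{place}-job-results.csv"


# Parse an explicit argument list here; a real script would call parse_args()
# with no arguments so that the values come from the command line.
args = build_parser().parse_args(["Android developer", "Boston"])
print(output_filename(args.keyword, args.place))
```

Because the filename is built directly from the arguments, a keyword containing characters that are not valid in filenames on your system would need to be sanitized first.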

If you want to download the code, you can request it through the link below:

https://www.webscreenscraping.com/contact-us.php

Conclusion

This scraper should work for most job listings on Glassdoor unless the site structure changes drastically. If you need to extract data from millions of pages in a short period of time, this scraper may not be the right fit for you.
